Data Driven Manufacturing Intelligence

WATS Test Data Management for OEMs and CMs

Collect and visualize your test & repair data

Integrate any test or repair station with WATS and democratize test data from across your entire supply chain. Quickly identify production yield issues, frequent test or product failures, poor test coverage, and other performance problems in real time, directly through your web browser or mobile device.

Join thousands of engineers and managers worldwide

Improve processes & automate monitoring

WATS helps you gain new insights from your manufacturing data, insights you can use to better understand and evolve your existing test processes and product designs. Make these insights operational with advanced process monitoring rules and automated distribution intelligence. WATS uses data analysis to provide Manufacturing Intelligence.

Integrated software stack for Industry 4.0

Advanced sequencer integrations help turn your existing test systems into modern Industrial IoT devices. The WATS REST API helps ensure that your enterprise software stack, or your Unified Namespace, is fully integrated and optimized from top to bottom, ready for the digital transformation journey.

Virinco and WATS are Award Winners

“Frost & Sullivan finds Virinco’s seamless and frictionless approach and close customer relationships position it as a partner of choice to automate and digitalize the electronics manufacturing test with WATS.”

Samantha Fisher, Best Practices Research Analyst at Frost & Sullivan

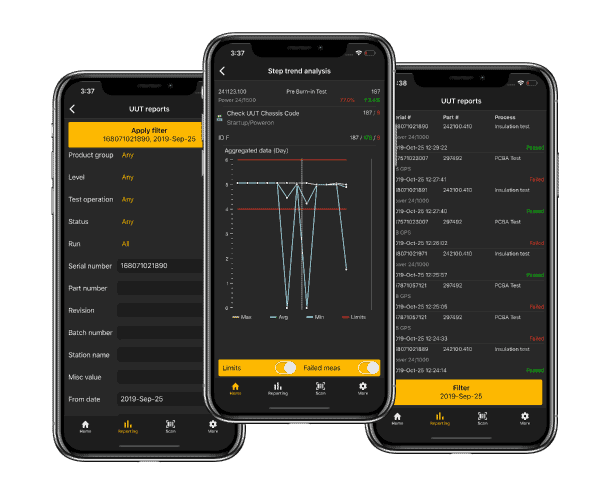

WATS for Mobile

Access data from your manufacturing sites directly on your mobile phone.

WATS for Mobile offers an overview of yields and volumes, with drill-down capabilities to see failed tests and trends, plus a built-in scanner to quickly access the test history of your products.

Download WATS for Mobile for free: